News

For real, ECWolf 1.4 is almost here 2022-07-18 03:19:23

For those who don't hang out in the Matrix room: I quit my job with Fortinet and, having reclaimed 8 hours a day, set a goal for myself to have ECWolf 1.4 feature complete during the interim between jobs. The good news is that the goal was a success, and I'm proud to announce that ECWolf 1.4 has been feature complete since June 30th with just some refinements and bug hunting to go. That means multiplayer is working in the development builds! I'm still working through a few bugs so it's not time for release just yet, but it's exciting to see the light at the end of the tunnel.

For those interested in testing, I have written some notes about the multiplayer feature.

WolfstoneExtract - Play Wolfstone 3D and Elite Hans in ECWolf 2020-07-25 17:59:09

Although it took me longer to get the final touches in, I'm pleased to announce that my Wolfstone extraction utility is complete! With this tool you can pull Wolfstone 3D out of Wolfenstein II or Elite Hans: Die Neue Ordnung out of Wolfenstein Youngblood.

For those who are not aware, these two games contain arcade machines with a parody of Wolfenstein 3D set in the alternate history world of the MachineGames Wolfenstein series. Effectively, Wolfstone 3D is Wolfenstein 3D with the roles reversed, specifically with regard to symbolism. Elite Hans is a new set of 18 levels of dubious quality.

Conveniently, the developer chose to emulate the original engine, down to even using the same wl6 file formats. This makes them easy to load in ECWolf or even in vanilla, but some subtle tweaks required a few additions to ECWolf for the full experience. Thus, once extracted, the development version of ECWolf is required to play (it will detect the pk3 files along with the other supported games). It is important to note that the extraction utility does only what it says: it extracts the game into a form readable by ECWolf. It does not perform any alterations on the game data. The sound quality is horrible, but that's because the processing to make it sound like it was being played on an in-game arcade cabinet was baked into the sounds. Not anything of my doing.

The tool can be found on the ECWolf downloads page. Binaries are available for 64-bit Windows and Linux since those are the platforms you can install Wolfenstein II and Youngblood on.

This tool has been made possible by the work of emoose (idCrypt), Adam "hcs64" Gashlin (ww2ogg), powzix (ooz), and the XeNTaX and ZenHAX communities. Although I did a little bit of reversing myself, WolfstoneExtract is largely an assembly of those tools and that knowledge into a nice integrated package.

Edit 2020-07-27: Realized my musicalias implementation was backwards so corrected extraction tool accordingly as 1.1.

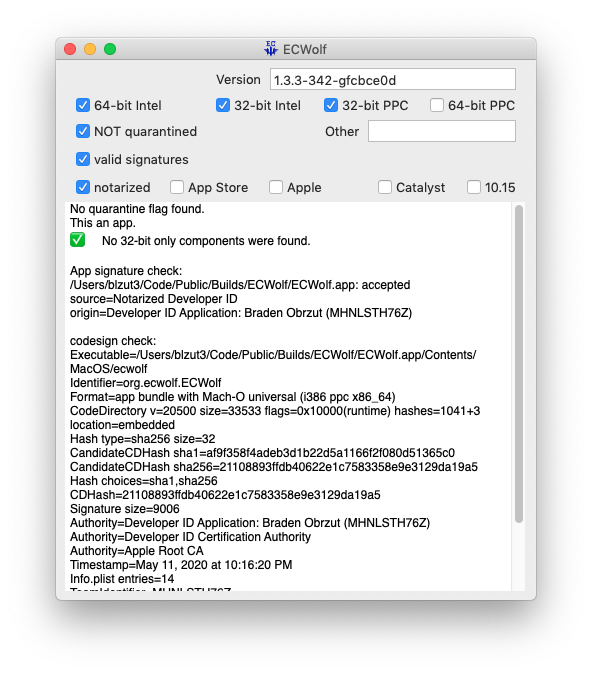

Sometimes Apple surprises you with freedom 2020-05-12 02:33:49

For all two of you that wondered, Apple will actually notarize a universal binary with PowerPC support. I was actually a bit surprised, since you're supposed to use the 10.9 or newer SDK, but apparently if you lipo older binaries together with an x86_64 one that complies, they'll be happy with that. This particular binary was built with 10.11 for x86_64, 10.6 for i386, and 10.4 for ppc.
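
For anyone curious what that lipo step might look like, here is a minimal sketch in Python that only assembles the command line and does not run it. The `-create` and `-output` options are part of Apple's `lipo` tool, but the file names are hypothetical and this is not necessarily the exact command used for the build described above.

```python
import shlex


def lipo_create_command(slices, output):
    """Return the argument list for `lipo -create`, which merges
    per-architecture binaries into a single universal binary."""
    return ["lipo", "-create", *slices, "-output", output]


# Hypothetical file names, one per architecture mentioned in the post.
slices = ["ecwolf-x86_64", "ecwolf-i386", "ecwolf-ppc"]
command = lipo_create_command(slices, "ecwolf-universal")

# Print a copy-pasteable shell command; nothing is executed here.
print(shlex.join(command))
```

On a Mac with the Xcode command line tools installed, the resulting list could be handed to `subprocess.run(command, check=True)`.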

ECWolf migrated to Git 2019-09-09 00:09:38

Following the news that Bitbucket will drop Mercurial support in 2020, I have migrated the repositories to Git. Just in case they're needed for historical reference, the old Mercurial repositories will remain with their "-hg" suffix until they're automatically deleted. Ultimately nothing should be lost since the hg-git conversion is generally pretty solid (Zandronum has been using it to sync with GZDoom), and I even wrote a script to migrate the subrepositories.

In the end this will likely be a good thing for the project. As much as I loved using Mercurial, the reality is that Git is what everyone knows, and bad translation between Git and Hg has been a bit of a thorn in my side when it came to pull requests (Hg branches are not the same as Git branches). That's even putting aside the fact that Bitbucket's Mercurial support has been somewhat eroding since they added support for Git many years back. No longer having to deal with feature omissions will be nice for me at least.

Still, I'm going to miss TortoiseHg and subrepo support that works like an end user actually expects. For the former, there are a number of GUIs for Git, but so far I haven't found one that doesn't annoy me in some way. Usually the problem is that they focus on stuff that's easy to do on the command line and don't solve the problem I'm actually trying to solve, which is browsing changes across history. For the latter, Git has submodules, but for whatever reason they never just update the way that the user expects and require a bit of confusing manual intervention at some point or another. I guess the flip side of the way Mercurial does it is that the Hg repos are now kind of hard to check out, since the subrepos aren't in the location Mercurial expects them to be and the checkout errors out completely.

I've already had a few people ask me why I don't switch to another host or self-host. Essentially, Mercurial is losing its equivalent to GitHub, and without it I don't have much hope for the platform to continue thriving. There are a few sites that are trying to cater to those who wish to continue using Mercurial, but I haven't seen one that will have the same "everyone can use it for free" policy that's somewhat essential these days for welcoming new programmers. Self-hosting produces a similar problem, but now submitting patches becomes emailing patches. Sure, it's decentralized that way, but in my experience most people are resistant to using email these days.

Then the question becomes "why even stick with Bitbucket?" Honestly, I'm not sure there are any particularly great reasons, but there are two that keep me inclined to stay. First, I like how they have an organization feature for repos, unlike GitHub where, yes, you can have a team account, but within that team it's still a flat list ordered by modification time. Second, I was using their issue tracker for ECWolf and I was able to keep that by staying there. The downside is that GitHub is more popular, but I don't think having an Atlassian account (or sending an email) is that big of a hurdle for people to contribute.