News

WolfstoneExtract - Play Wolfstone 3D and Elite Hans in ECWolf 2020-07-25 17:59:09

Although it took me longer than expected to get the final touches in, I'm pleased to announce that my Wolfstone extraction utility is complete! With this tool you can pull Wolfstone 3D out of Wolfenstein II or Elite Hans: Die Neue Ordnung out of Wolfenstein Youngblood.

For those who are not aware, these two games contain arcade machines with a parody of Wolfenstein 3D set in the alternate history world of the MachineGames Wolfenstein series. Effectively, Wolfstone 3D is Wolfenstein 3D with the roles reversed, particularly in terms of symbolism. Elite Hans is a new set of 18 levels of dubious quality.

Conveniently, the developer chose to emulate the original engine down to using the same WL6 file formats. This makes the games easy to load in ECWolf or even in vanilla, but a few subtle tweaks they made required some additions to ECWolf for the full experience. Thus, once the games are extracted, the development version of ECWolf is required to play them (it will detect the pk3 files along with the other supported games). I should note that the extraction utility does only what it says: it extracts the game into a form readable by ECWolf. It does not alter the game data in any way. The sound quality is horrible, but that's because the processing that makes it sound like it is playing on an in-game arcade cabinet was baked into the sounds. That's not anything I did.

The tool can be found on the ECWolf downloads page. Binaries are available for 64-bit Windows and Linux, since those are the platforms you can install Wolfenstein II and Youngblood on.

This tool has been made possible by the work of emoose (idCrypt), Adam "hcs64" Gashlin (ww2ogg), powzix (ooz), and the XeNTaX and ZenHAX communities. Although I did a little bit of reversing myself, WolfstoneExtract is largely an assembly of those tools and that knowledge into a nice integrated package.

Edit 2020-07-27: Realized my musicalias implementation was backwards, so I corrected the extraction tool accordingly and released it as 1.1.

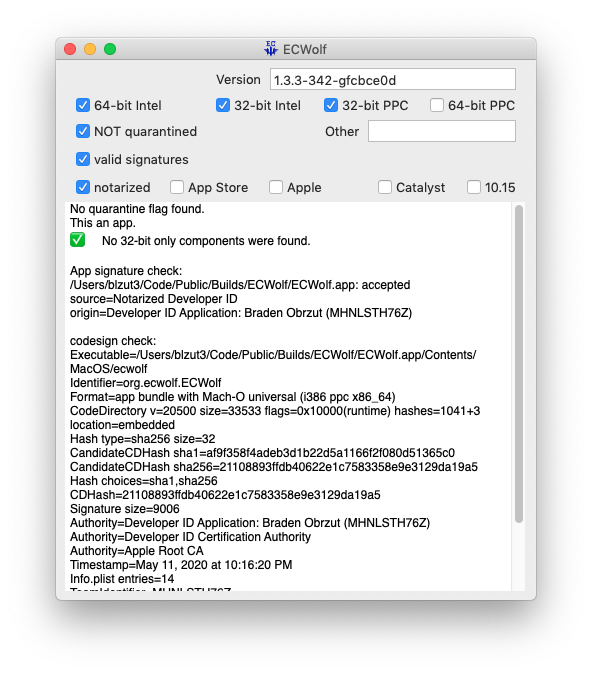

Sometimes Apple surprises you with freedom 2020-05-12 02:33:49

For all two of you who wondered, Apple will actually notarize a universal binary with PowerPC support. I was a bit surprised, since you're supposed to use the 10.9 or newer SDK, but apparently if you lipo older binaries together with a compliant x86_64 one, they're happy with that. This particular binary was built with the 10.11 SDK for x86_64, 10.6 for i386, and 10.4 for ppc.
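For the curious, a universal binary produced by lipo is just a small big-endian "fat" header listing one slice per architecture, followed by the slices themselves. The sketch below reads that header to list the slices, which is one way to double check that an x86_64, i386, and ppc build all made it in. It assumes the classic 32-bit fat layout from Apple's `<mach-o/fat.h>` (magic `0xCAFEBABE`, then `nfat_arch`, then 20-byte `fat_arch` records) and only knows a handful of CPU type constants; it is an illustration, not part of any ECWolf tooling, and in practice `lipo -info` gives the same answer.

```python
import struct
from typing import List

# CPU type constants from <mach/machine.h> (only the ones relevant here)
CPU_ARCH_ABI64 = 0x01000000
CPU_TYPE_X86 = 7
CPU_TYPE_POWERPC = 18
CPU_NAMES = {
    CPU_TYPE_X86: "i386",
    CPU_TYPE_X86 | CPU_ARCH_ABI64: "x86_64",
    CPU_TYPE_POWERPC: "ppc",
    CPU_TYPE_POWERPC | CPU_ARCH_ABI64: "ppc64",
}

FAT_MAGIC = 0xCAFEBABE  # 32-bit fat header magic; FAT_MAGIC_64 is not handled


def fat_architectures(data: bytes) -> List[str]:
    """Return the architecture name of each slice in a 32-bit fat Mach-O header."""
    magic, nfat_arch = struct.unpack_from(">II", data, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a 32-bit fat Mach-O header")
    archs = []
    for i in range(nfat_arch):
        # Each fat_arch record: cputype, cpusubtype, offset, size, align
        cputype, _subtype, _offset, _size, _align = struct.unpack_from(
            ">5I", data, 8 + 20 * i
        )
        archs.append(CPU_NAMES.get(cputype, hex(cputype)))
    return archs
```

To use it on a real file, pass in the first few hundred bytes, e.g. `fat_architectures(open(path, "rb").read(4096))`; a universal build like the one above should report `x86_64`, `i386`, and `ppc`.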

ECWolf migrated to Git 2019-09-09 00:09:38

Following the news that Bitbucket will drop Mercurial support in 2020, I have migrated the repositories to Git. Just in case they're needed for historical reference, the old Mercurial repositories will remain with their "-hg" suffix until they're automatically deleted. Ultimately nothing should be lost since the hg-git conversion is generally pretty solid (Zandronum has been using it to sync with GZDoom), and I even wrote a script to migrate the subrepositories.
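For anyone facing the same subrepository problem, here is a rough sketch of what such a migration involves. It is not my actual script, just an illustration under stated assumptions: `.hgsub` lines are read as `path = source` (with an optional kind prefix like `[git]`), `.hgsubstate` lines as `<revision> <path>`, and the output is `.gitmodules` text plus a list of (path, commit) gitlinks. The `url_map` (old Mercurial URL to new Git URL) and `rev_map` (Mercurial changeset to converted Git commit) dictionaries are hypothetical inputs you would have to build from your own conversion.

```python
from typing import Dict, List, Tuple


def parse_hgsub(text: str) -> Dict[str, str]:
    """Parse .hgsub lines of the form 'path = source' into {path: source}."""
    subrepos = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and config-style comments
        if not line or line.startswith(("#", ";")):
            continue
        path, sep, source = line.partition("=")
        if not sep:
            continue
        source = source.strip()
        # Drop an optional subrepo kind prefix such as "[git]" or "[svn]"
        if source.startswith("[") and "]" in source:
            source = source[source.index("]") + 1:].strip()
        subrepos[path.strip()] = source
    return subrepos


def parse_hgsubstate(text: str) -> Dict[str, str]:
    """Parse .hgsubstate lines of the form '<revision> <path>' into {path: revision}."""
    revisions = {}
    for line in text.splitlines():
        if line.strip():
            rev, path = line.split(None, 1)
            revisions[path.strip()] = rev
    return revisions


def convert_subrepos(hgsub: str, hgsubstate: str,
                     url_map: Dict[str, str],
                     rev_map: Dict[str, str]) -> Tuple[str, List[Tuple[str, str]]]:
    """Build .gitmodules text and (path, git_commit) gitlinks from Mercurial subrepo files.

    url_map (hypothetical): old Mercurial URL -> new Git URL; unmapped URLs pass through.
    rev_map (hypothetical): Mercurial changeset -> converted Git commit; a pinned
    revision missing from it raises KeyError so the problem is not silently ignored.
    """
    subrepos = parse_hgsub(hgsub)
    revisions = parse_hgsubstate(hgsubstate)
    gitmodules = []
    gitlinks = []
    for path, source in subrepos.items():
        url = url_map.get(source, source)
        gitmodules.append(f'[submodule "{path}"]\n\tpath = {path}\n\turl = {url}\n')
        if path in revisions:
            gitlinks.append((path, rev_map[revisions[path]]))
    return "".join(gitmodules), gitlinks
```

Each resulting gitlink could then be recorded in the converted repository's index with `git update-index --add --cacheinfo 160000,<commit>,<path>` before committing alongside the generated `.gitmodules`.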

In the end, this will likely be a good thing for the project. As much as I loved using Mercurial, the reality is that Git is what everyone knows, and bad translation between Git and Hg has been a bit of a thorn in my side when it came to pull requests (Hg branches are not the same as Git branches). That's even putting aside the fact that Bitbucket's Mercurial support has been gradually eroding since they added support for Git many years back. No longer having to deal with feature omissions will be nice, for me at least.

Still, I'm going to miss TortoiseHg and subrepo support that works the way an end user actually expects. For the former, there are a number of GUIs for Git, but so far I haven't found one that doesn't annoy me in some way. Usually the problem is that they focus on things that are easy to do on the command line and don't solve the problem I'm actually trying to solve, which is browsing changes across history. For the latter, Git has submodules, but for whatever reason they never just update the way the user expects and require a bit of confusing manual intervention at some point or another. I guess the flip side of Mercurial's approach is that the Hg repos are now hard to check out: the subrepos aren't where Mercurial expects them to be, so it errors out completely.

A few people have already asked me: why not switch to another host or self-host? Essentially, Mercurial is losing its equivalent to GitHub, and without it I don't have much hope for the platform to continue thriving. There are a few sites trying to cater to those who wish to keep using Mercurial, but I haven't seen one with the same "everyone can use it for free" policy that's somewhat essential these days for welcoming new programmers. Self-hosting has a similar problem, plus submitting patches becomes emailing patches. Sure, it's decentralized that way, but in my experience most people are resistant to using email these days.

Then the question becomes "why even stick with Bitbucket?" Honestly, I'm not sure there are any particularly great reasons, but two keep me inclined to stay. First, I like that they have a feature for organizing repos. On GitHub, yes, you can have a team account, but within that team it's still a flat list ordered by modification time. Second, I was using their issue tracker for ECWolf and was able to keep it by staying there. The downside is that GitHub is more popular, but I don't think having an Atlassian account (or sending an email) is that big of a hurdle for people who want to contribute.

New development builds server 2018-11-13 04:44:03

For those who aren't familiar, I've been building most of the binaries on the DRD Team devbuilds site. This came about because people were requesting ECWolf development builds, but no one was volunteering to do them, so I set up some scripts and a VM on my Mac, and it has been building (mostly) nightly for several years now. Since I had already expended the effort to set up an automatic build for ECWolf, I ended up absorbing most of the other builds as well.

The build Mac was a base model late 2009 Mac Mini. Shortly after it took on the build server role, I upgraded it with 8GB of memory and a 1TB SSHD. That sped things up a lot, but of course there's only so much a mobile Core 2 Duo can do. Fast forward to the end of 2016: with the release of macOS 10.12, even though the late 2009 MacBook with identical specs to the Mac Mini is supported, I ended up stuck on 10.11 (yes, I'm aware there are workarounds to install even Mojave on it). Between the Core 2 just not cutting it anymore by my standards and it no longer being supported by Apple, it was time for a new machine, but of course this was right when Apple was forgetting that they even made Macs.

Now, while I realize it might be a tad ironic since I buy retro computer hardware all the time, I couldn't bring myself to buy a 2-year-old computer, much less a 4-year-old one. That's even ignoring the fact that the 2012 models were higher specced. But here we are in November of 2018, and Apple has finally released a new Mac Mini, with non-soldered memory no less! So of course I bought one. For those who want to know, I got the i7-8700B with 1TB of storage, 10G Ethernet (since my NAS and main desktop are 10G), and of course 8GB of RAM, since I can upgrade that later.

Here we are, 9 years later, and I'm once again paying almost as much for the Mac as for my desktop while getting 1/3 the power.

Now that I get to basically start over from scratch, I'm taking some time to make my build scripts a little more generic. Over the years I've had people ask to see my scripts (mostly because they wanted to help fix some build issues), which wasn't really useful since they were just basic "make it work" throwaway code. This time around I want something I can put up on GitHub, not because the scripts will be particularly reusable by anyone, but rather to make what's actually happening more transparent.

I haven't talked about the elephant in the room yet, though: Windows. When I initially set up the build server in 2012, I decided to use Windows Server 2012. The primary reason was that I hoped to use the Server Core mode to reduce memory usage, on top of Windows 8 seemingly using less memory than Windows 7. This was especially important since at the time the Mac only had 2GB of RAM. This was fine until Visual Studio 2017 came along and required Windows 8.1. What most people don't know is that while Microsoft treats Windows 8.0 and 8.1 as the same OS, Windows Server 2012 and 2012 R2 are treated as separate operating systems. In other words, 2012 and 2012 R2 are supported for the same period of time, and there's no free upgrade between them. So everyone who was using 2012 for build servers got screwed.

Due to how slow the 2009 Mac Mini was, the fact that the builds were working, and laziness, I didn't bother doing anything about it. But now that I have a new machine, I need to figure out what I'm going to do for the Windows VM. I would like to try Server Core again, given that Windows containers are a thing, we now have an SSH server on Windows, and I would get to control when updates are applied. But after being screwed over by new Visual Studio versions requiring the "free upgrades" to be applied, I'm not sure this route is viable, especially given the cost of Windows Server. I might end up just using Windows 10, which I don't really have a problem with, except that the VM will be suspended most of the time, so automatic updates could keep the builds from reliably kicking off at the scheduled time. I'm still not sure what to do here.

Anyway, now that I've said all of that to pad this post out beyond a few sentences: if anyone was wondering, it's not particularly difficult to set up a PowerPC cross compiler on Mojave. That actually surprised me, since my previous setup involved compiling an install on Snow Leopard and moving it over, but it seems the XcodeLegacy scripts got a little less quirky since the last time I ran them. Right now it's GCC 6, since that's what I had working on my PowerMac, but I've heard newer versions work. I just need to try it, confirm that it works, and update the article. Update 2018/12/10: Confirmed GCC 8.2 is working!